SAP: Install a New License Key

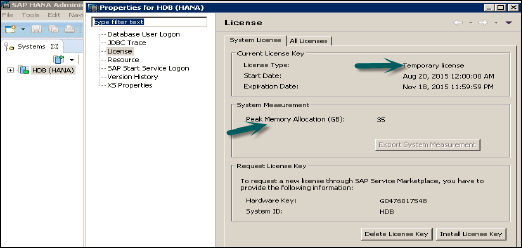

SAP ERP is enterprise resource planning software developed by the German company SAP SE. It incorporates the key business functions of an organization.

Mar 11, 2015. Once you have your license key, save it on the destination machine. In SAP License Administration (transaction SLICENSE), right-click the 'Digitally-Signed Licenses' tab and choose 'Install License'. Navigate to your license key file and import it into the system. If all goes well, you should see the following.

The latest version, SAP ERP 6.0, was made available in 2006, and the most recent enhancement package (EHP8) for SAP ERP 6.0 was released in 2016.

Business processes included in SAP ERP span Operations, Financials (including Financial Supply Chain Management), human capital management (including e-Recruiting) and other functional areas.

Development

SAP ERP was built on its predecessor, SAP R/3. SAP R/3, which was officially launched on 6 July 1992, consisted of various applications on top of SAP Basis, SAP's set of programs and tools.

All applications were built on top of the SAP Web Application Server. Extension sets were used to deliver new features while keeping the core as stable as possible. The Web Application Server contained all the capabilities of SAP Basis. A complete architecture change took place with the introduction of mySAP ERP in 2004.

R/3 Enterprise was replaced with the introduction of ERP Central Component (SAP ECC). SAP Business Warehouse, SAP Strategic Enterprise Management and Internet Transaction Server were also merged into SAP ECC, allowing users to run them under one instance. The SAP Web Application Server was wrapped into SAP NetWeaver, which was introduced in 2003. Architectural changes were also made to support an enterprise service architecture and transition customers to a service-oriented architecture. The latest version, SAP ERP 6.0, was released in 2006 and has since been updated through SAP enhancement packages, the most recent being SAP enhancement package 8 for SAP ERP 6.0 in 2016.

Implementation

SAP ERP consists of several modules, including Financial Accounting (FI), Controlling (CO), Asset Accounting (AA), Sales & Distribution (SD), Material Management (MM), Production Planning (PP), Quality Management (QM), Project System (PS), Plant Maintenance (PM) and Human Resources (HR). SAP ERP collects and combines data from the separate modules to provide the organization with enterprise resource planning. Typical implementation phases are:

• Phase 1 - Project Preparation
• Phase 2 - Business Blueprint
• Phase 3 - Realization
• Phase 4 - Final Preparation
• Phase 5 - Go-Live and Support

Companies planning to implement or upgrade an SAP ERP system should pay close attention to system integration to protect their implementation from failure. With system integration in place, data flows completely and correctly among the various SAP ERP components, which not only streamlines business processes but also eliminates or minimizes redundant data entry.

An analyst firm estimates that 55% to 75% of all ERP projects fail to meet their objectives. Of the top 10 barriers to a successful ERP journey, 5 can be addressed by developing and implementing a structured change management program.

Deployment and maintenance costs

Effectively implemented SAP ERP systems may have cost benefits:

• Reduced level of inventory through improved planning and control.
• Improved production efficiency, which minimizes shortages and interruptions.
• Reduced materials cost through improved procurement and payment protocols.
• Reduced labor cost through better allocation of staff and reduced overtime.
• Increased sales revenue, driven by better managed customer relationships.
• Increased gross margin percentage.
• Reduced administrative costs.
• Reduced regulatory compliance costs.

Integration is the key in this process.

'Generally, a company's level of data integration is highest when the company uses one vendor to supply all of its modules.' A packaged software product has some level of integration, but that depends on the expertise of the company installing the system and on how the package allows users to integrate the different modules. It is estimated that 'for a Fortune 500 company, software, hardware, and consulting costs can easily exceed $100 million (around $50 million to $500 million).

Large companies can also spend $50 million to $100 million on upgrades. Full implementation of all modules can take years,' which also adds to the end price. Midsized companies (fewer than 1,000 employees) are more likely to spend around $10 million to $20 million at most. Small companies are unlikely to need a fully integrated SAP ERP system unless they are likely to become midsized, in which case the same figures apply as for a midsized company. Independent studies have shown that deployment and maintenance costs of an SAP solution can vary greatly depending on the organization. For example, some point out that because of the rigid model imposed by SAP tools, a lot of customization code may have to be developed and maintained to adapt the system to the business process.

Others pointed out that a return on investment could only be obtained when there was both a sufficient number of users and sufficient frequency of use. Deploying SAP itself can also involve a lot of time and resources.

SAP Transport Management System

SAP Transport Management System (TMS) is a tool within SAP ERP systems to manage software updates, termed transports, on one or more connected SAP systems. It should not be confused with SAP Transportation Management, a stand-alone module for facilitating logistics and supply chain management in the transportation of goods and materials.

SAP BusinessObjects 4.2 SP4 offers numerous new features, and many of them have been anticipated for years. Below is just a sampling of the enhancements found in SAP BusinessObjects 4.2 SP4.

• The Web Intelligence DHTML designer can now realistically replace the Java Applet designer. You can once again create WebI reports in Google Chrome without needing to deploy an Oracle JRE.
• There is a new optional HTML5 (Fiori-style) BI Launch Pad and Web Intelligence viewer.
• There is a new CMS Database Driver for metadata reporting against the CMS database. I always thought it was odd that you could not build reports on the metadata generated by a BI reporting platform.
• You can create Run-Time Server groups and assign them to folders, users and security groups. This allows you to set up dedicated “server nodes” to process specific workloads.
• Virus scanning is now integrated with the FRS services.
• User account status icons now appear when listing user accounts in the CMC, so you can quickly identify disabled accounts.
• Administrators can now limit the number of Inbox items. Just as with other folders, the system deletes the oldest documents when the limit is exceeded.
• The Promotion Management (LCM) command line now allows you to define a batchJobQuery parameter. The query in this parameter acts like a cursor for the ExportQuery parameter: for each row returned by the batch job query, a value can be passed to the ExportQuery via a placeholder.
• You can update the platform from a combined support package and patch stack. You no longer need to first apply a support pack and then apply its patch. Given the amount of time it saves, this might be the most important enhancement of the group.

See for yourself all of the enhancements available in 4.2 SP4.

Be cautious when upgrading! While many of these enhancements are outstanding, there are a few issues related to performing an in-place upgrade on Microsoft Windows.

Specifically, SAP included an upgraded Windows installation compiler with the 4.2 SP4 media. This “compiler upgrade” affects both historic patches and add-ons within the platform; add-ons such as Lumira, Design Studio, AAOffice and Explorer are all impacted. The short version of the story is that you might want to consider deploying a fresh BOE 4.2 SP4 environment and then moving your existing content to it. Because the compiler was upgraded, you will not be able to remove prior patches, repair installations or remove add-ons. This assumes that you are performing an in-place upgrade without first removing all of the historic patches and add-ons.

I do not recommend attempting to remove all of the historic patch stacks or add-ons, because historically that can create more problems than it solves. In my experience, the 4.2 SP4 upgrade was very problematic. I had an issue with the Lumira and Design Studio plug-ins after the upgrade, and the resolution was to run an installation repair. Unfortunately, the repair no longer worked after upgrading to 4.2 SP4. To resolve this, I had to manually back up the CMS DB, FRS and configuration files and then uninstall the software following the documented manual uninstall steps. I then reinstalled from the base 4.2 SP4 full installer, recovered the DB, FRS and configuration files, and installed compatible plug-ins.

Compatible plug-ins use the same compiler as 4.2 SP4 and will not have this issue. Even so, the upgrade took almost six full days because of these unexpected issues. SAP Note 2485676 indicates that, as a workaround, you can install a new 4.2 SP4 system side-by-side with your existing 4.x system.

It also indicates that you can use the Promotion Management GUI to migrate your content. However, as most experienced BOE administrators know, the Promotion Management GUI is not a scalable tool when you are trying to promote 20,000+ objects between two 4.x systems. That said, with the enhancements to the Promotion Management command line interface (CLI) in 4.2 SP4, it might now be a scalable option. Regardless, Promotion Management is not the easiest way to promote mass content between two environments. In addition to the recommendations in the SAP Note, there are two additional upgrade options, which I outline below. These options avoid the need to use Promotion Management. However, they do take time to complete.

You also truly need to be experienced and have a sound backup/restore solution in place.

Other options when upgrading to 4.2 SP4

1.) Upgrade the system in-place using the 4.2 SP4 upgrade media. Back up the FRS, CMS DB and any configuration files. Manually remove the BOE binaries, installation metadata and registry settings.

Install BOE 4.2 SP4 using the full media, and then restore the FRS and configuration files. In my case, I reused the existing CMS and Audit DB during the full install without any issues; the CMS DB backup is a “just-in-case” step. You can then install compatible plug-ins as needed.

2.) Upgrade the original systems in-place using the 4.2 SP4 upgrade media. Add additional hosts as if you were clustering in additional servers. On each additional host, use the full BOE 4.2 SP4 installer. Move all service operations to the new hosts.

Install compatible plug-ins to each new host as needed. Remove the services and SIA from the original host using the CMC / CCM.

This effectively de-clusters the old systems from the environment, and those hosts can be retired. You now have all-new systems without any compiler upgrade issues.

Of the many notable developments of the past two years, one is that many SAP customers are now running SAP HANA. I recall being at conferences from 2013 through 2014 and only being able to find a few attendees who were actually using an SAP HANA-based solution.

That has all changed in 2017. I now find that a large portion of the SAP ecosystem is running something based on SAP HANA. With its popularity and usage on the rise, it is inevitable that SAP HANA security will soon be a top priority for most organizations and auditors. Solutions such as Suite on SAP HANA (SoH) and SAP S/4HANA are becoming more commonplace, and the dream of running ERP and analytics on the same platform is starting to take shape. This is prompting more organizations to grant access directly to the SAP HANA database.

SAP’s ERP solutions are the lifeblood of many organizations, and accordingly they must be secured at all layers. In my experience, most organizations have addressed access to the SAP application server layer. They might have lingering issues with segregation of duties (SoD), user account maintenance and other items, but one would assume that they have attempted to address them at some point. However, I find that many organizations have failed to implement any level of security within their SAP HANA database. They might have secured their SAP application layer, but none of that will matter the second someone commits mass fraud by changing data directly in the SAP application server’s tables. With a few SQL INSERT, UPDATE and DELETE statements, someone could be sending themselves a few $9,000 checks every week.

Regarding SAP HANA security, I often find that organizations share the SYSTEM account and other service accounts used by the SAP application server among multiple administrators. For example, the entire BASIS team might know the SAP application server’s database user account and password, and that account can change data directly in the SAP system tables.

I also find that most organizations grant too many privileges to users simply because they do not understand the SAP HANA security layer. Ignorance should never be an excuse for not implementing a proper SAP HANA security model. In short, many organizations have numerous gaps in their SAP HANA security.
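To make the over-privileging problem concrete, here is a minimal Python sketch of one review step, assuming you have exported grant metadata from SAP HANA (for example, from the GRANTED_PRIVILEGES system view) into simple records. The Grant record, the find_dml_grants helper, the SAPABAP1 schema and all user names are hypothetical; the sketch only flags anyone outside an approved list who holds INSERT, UPDATE or DELETE on the ERP schema.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Set

# Privileges that let a grantee change table data directly.
DML_PRIVILEGES = {"INSERT", "UPDATE", "DELETE"}


@dataclass(frozen=True)
class Grant:
    """One granted privilege (hypothetical record loosely shaped like HANA grant metadata)."""
    grantee: str
    schema_name: str
    object_name: Optional[str]  # None for a schema-wide grant
    privilege: str


def find_dml_grants(grants: Iterable[Grant], erp_schema: str,
                    approved_grantees: Set[str]) -> List[Grant]:
    """Return grants that let an unapproved grantee modify data in erp_schema."""
    return [
        g for g in grants
        if g.schema_name == erp_schema
        and g.privilege.upper() in DML_PRIVILEGES
        and g.grantee not in approved_grantees
    ]


# Hypothetical export: SAPABAP1 is both the ERP schema and its technical user.
grants = [
    Grant("SAPABAP1", "SAPABAP1", None, "UPDATE"),
    Grant("JDOE", "SAPABAP1", "BSEG", "update"),
    Grant("REPORTING", "SAPABAP1", "BKPF", "SELECT"),
    Grant("JDOE", "SANDBOX", None, "DELETE"),
]

for g in find_dml_grants(grants, "SAPABAP1", approved_grantees={"SAPABAP1"}):
    print(f"{g.grantee} has {g.privilege.upper()} on {g.schema_name}.{g.object_name or '*'}")
```

Read-only grants such as SELECT are deliberately ignored here; the sketch targets only the write access that enables the fraud scenario above.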

These gaps are further compounded when organizations grant direct access to the SAP HANA database. As you can see in the figure below, most modern analytic applications connect directly to the SAP HANA database via ODBC, JDBC or HTTP. Even some Fiori applications require direct database access.

This is just the tip of the iceberg in my opinion. Securing a SAP HANA system goes well beyond user accounts and privileges.

We must also have a strategy that addresses data encryption, communication encryption, password policies, auditing and monitoring. Cybersecurity is a major issue these days, and you have to address every aspect of the SAP HANA security model to have any chance of defending your organization’s data and the systems that run on SAP HANA.

With this in mind, here are the top five reasons every organization needs to address its SAP HANA security model before it’s too late.

• Fraud can cause an organization to lose money and its reputation. When SAP HANA is the database behind an SAP ERP application server, someone only needs INSERT, UPDATE or DELETE access to the SAP ERP tables to commit mass fraud.
• When your SAP ERP system is offline, it is hard to conduct business.

Many organizations use their SAP ERP system to its full extent. When this system is offline, most processes within the organization come to a complete stop. So yes, securing your SAP HANA system is also about system availability. An untrained administrator with a high level of privileges can be just as dangerous as a hacker trying to bring down your system. Preventing these types of mistakes requires a sound SAP HANA security model, SoD and training.
• Hackers like to hack into your systems. They can gain access to your systems and data for a variety of reasons.

Those reasons are almost never well intended. It’s not as if they hack into your system to perform routine maintenance for you. Most likely, they are there to steal information, commit fraud or extortion, cause a denial of service or carry out other malicious acts. Therefore, you have to make it very difficult for them to penetrate your SAP HANA database security.
• Compliance means that resistance to implementing SAP HANA security is futile. Most organizations have obligations to external regulatory agencies and must adhere to their security standards.

SAP HANA is one of many systems subject to numerous regulations. Therefore, we need to have a security model and security strategy for all SAP HANA systems.
• Access to data must be governed. Almost all SAP HANA solutions involve the storage of master data and transaction data.

Such data often needs to be secured from external access, but it also needs to be segregated within the organization. Without a proper security model in place, most organizations have no means of securely distributing data hosted in SAP HANA.

To help organizations understand the key aspects of the SAP HANA security model, I recently authored a book titled SAP HANA Security Guide, available from SAP Press and Rheinwerk Publishing. Below is the cover of this book. The book addresses the following key areas of security, along with a few other topics:

• User Provisioning
• Repository Roles
• Object Privileges
• System Privileges
• Package Privileges
• Analytic Privileges
• Encryption
• Auditing and Monitoring
• Troubleshooting
• Security recommendations

You can purchase a hard copy or an e-book edition today. Purchasing from SAP Press is the best way to get the book soon after it is released on 5/24/2017. A hard copy can also be purchased from Amazon.

Starting with SAP HANA 2.0, we can partition a single table between in-memory storage and SAP HANA extended storage (a.k.a. Dynamic Tiering). This is an excellent feature because it removes the management overhead and code required to maintain multiple tables and then bridge them together with additional code.
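For context, the pre-2.0 approach (two identical tables bridged by a view) looked roughly like this Python sketch. It uses the standard-library sqlite3 module purely as a stand-in for SAP HANA, since sqlite has no extended storage, and the FACT_SALES_HOT / FACT_SALES_WARM tables, the FACT_SALES_ALL view and the archive_before helper are hypothetical. It shows the two pieces of extra code a multistore partitioned table makes unnecessary: a UNION ALL view to bridge the tables, and a step that moves rows from one table to the other to archive them.

```python
import sqlite3

# sqlite3 stands in for SAP HANA: FACT_SALES_HOT plays the in-memory table and
# FACT_SALES_WARM the extended-storage table (both names are hypothetical).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE FACT_SALES_HOT  (ORDERYEAR INTEGER, AMOUNT REAL);
    CREATE TABLE FACT_SALES_WARM (ORDERYEAR INTEGER, AMOUNT REAL);

    -- Bridging view: consumers query this instead of either table.
    CREATE VIEW FACT_SALES_ALL AS
        SELECT ORDERYEAR, AMOUNT FROM FACT_SALES_HOT
        UNION ALL
        SELECT ORDERYEAR, AMOUNT FROM FACT_SALES_WARM;
""")

conn.executemany("INSERT INTO FACT_SALES_HOT VALUES (?, ?)",
                 [(2014, 20.0), (2016, 100.0), (2016, 50.0)])
conn.executemany("INSERT INTO FACT_SALES_WARM VALUES (?, ?)", [(2012, 75.0)])
conn.commit()


def archive_before(conn: sqlite3.Connection, cutoff_year: int) -> None:
    """Move rows older than cutoff_year from the hot table to the warm table."""
    with conn:  # one transaction: commit on success, roll back on error
        conn.execute("INSERT INTO FACT_SALES_WARM "
                     "SELECT ORDERYEAR, AMOUNT FROM FACT_SALES_HOT WHERE ORDERYEAR < ?",
                     (cutoff_year,))
        conn.execute("DELETE FROM FACT_SALES_HOT WHERE ORDERYEAR < ?", (cutoff_year,))


archive_before(conn, 2015)

# Reporting still goes through the view, so totals are unchanged by archiving.
totals = conn.execute(
    "SELECT ORDERYEAR, SUM(AMOUNT) FROM FACT_SALES_ALL "
    "GROUP BY ORDERYEAR ORDER BY ORDERYEAR").fetchall()
print(totals)  # [(2012, 75.0), (2014, 20.0), (2016, 150.0)]
```

With a single table partitioned across both stores, the view and the move step are replaced by partition definitions, and the optimizer adds the union itself.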

Basically, we can establish archiving within our tables without moving the data into separate tables. Prior to SAP HANA 2.0, we had to create two identical tables, one provisioned in-memory and the other in extended storage, and then create catalog views or SAP HANA information views to logically merge them. With HANA 2.0 we no longer have to do this. Let’s take a look at a few details and examples.

Some background info for the example:

• A table exists in the schema “BOOK_USER”.
• The name of the table in the example SQL is “dft.tables::FACT_SALES_PARTITION”.
• I am creating a range partition on the column “ORDERYEAR”.
• Some partitions will exist in-memory; others will exist in extended storage.
• The “ORDERYEAR” column is part of the primary key.
• Extended storage is enabled in the cluster.

The execution plans for multistore tables, which have partitions stored in both, effectively perform a UNION ALL automatically to bridge the data together. As you can see in the following plan visualization, a node called “Materialized Union All” is created to bridge the data from both data store types. This plan was generated from the query:

SELECT * FROM "BOOK_USER"."dft.tables::FACT_SALES_PARTITION"

Adding filters that directly reference the partition column seems to help the execution.

For example, filtering on our partitioning column “ORDERYEAR” changes the execution, and the UNION ALL is not executed. The optimizer is smart enough to remove the “Materialized Union All” from the execution because the query did not ask for data within the partitions hosted in extended storage:

SELECT * FROM "BOOK_USER"."dft.tables::FACT_SALES_PARTITION" WHERE ORDERYEAR = 2016

If the query does not filter on the partition column, the execution plan will look in extended storage and perform the UNION ALL operation. However, in my example below, the filter finds no matching data within extended storage and moves zero (0) records from the extended storage store into the “Materialized Union All” execution node. Because no data was moved from extended storage into that node, the execution was slightly faster than the first example.

SELECT * FROM "BOOK_USER"."dft.tables::FACT_SALES_PARTITION" WHERE ORDERDATEKEY >= 20160101

Given how long it took to find the SQL for this new option in the documentation, I thought I would share a few example SQL statements I used with a test table. The first three SQL statements create a partition and then move partitions in and out of extended storage. I also included a CREATE TABLE statement to show how this would be accomplished from scratch. For existing tables, we need to start by creating a normal range partition.

-- STEP 1 -- Start by creating all range partitions in STANDARD STORAGE (in-memory)
ALTER TABLE "BOOK_USER"."dft.tables::FACT_SALES_PARTITION" PARTITION BY RANGE ("ORDERYEAR") ( USING DEFAULT STORAGE ( PARTITION '1900'.

This week I had the pleasure of attending a conference in New Orleans, LA. If you look closely enough, you can just about see me hanging out at the far end of the Protiviti booth. While I spent most of my time in the exhibit hall talking with fellow BI enthusiasts, I did have a chance to wander over to the SAP pods.

One of the most interesting items SAP was showcasing was the upcoming set of enhancements to Web Intelligence and the BI Launch Pad in SAP BusinessObjects 4.2 SP4. For those who do not know, Web Intelligence will receive an alternative viewing interface in 4.2 SP4.

The interface is HTML5-based and will be optimized for use with touchscreens, tablets and even large-display smartphones. The look and feel was very reminiscent of SAP Lumira, Design Studio and most SAP UI5 / Fiori interfaces. Items such as input controls, page navigation, document navigation, sorting and filtering were all updated and enhanced for touch-based navigation. Again, it is an alternative viewing interface, meaning that the current interfaces are still available in the legacy BI Launch Pad.

With this in mind, it appears that the new WebI interface will only be available within the new “alternative” BI Launchpad. The new BI Launch Pad is reminiscent of many of the new Fiori Tile-based interfaces we see in SCN, SAP HANA and SAP Fiori applications.

It too is an alternative BI Launch Pad, meaning that the legacy launch pad is still available. From my viewpoint, I was quite excited to see these enhancements. This modernization will likely make viewing WebI reports on touchscreen-enabled devices a delight. It also gives WebI reports a more dashboard-like look and feel: an overall merger of Web Intelligence’s powerful reporting engine with a modern HTML5 analytics interface. Hopefully SAP will publish more information about the changes, with a demo, soon. 4.2 SP4 is estimated to be available sometime in the first quarter of 2017, so we will not have to wait long.

How might this change the rules of the game? If you look back at my 2013 blog posting titled “There is still life for Web Intelligence in 2013”, you might better understand what I am about to conclude. If we can use the powerful reporting engine of WebI to access data and present users with an awesome ad-hoc, dashboard-like interface, wouldn’t Web Intelligence change the game for SAP? Powerful, simple to use and modern: I can’t wait, and I think users will absolutely love what they see.

On Thursday, May 05, 2016 @ 2:00 PM EST, please join me as I present an upcoming webinar on the SAP HANA security model.

Overview

Although all systems have to deal with authentication, authorization and user provisioning, SAP HANA deviates from typical database platforms in the amount of security configuration that is done inside the database. Join Jonathan Haun, co-author of “Implementing SAP HANA”, for expert recommendations on configuring SAP HANA and setting up the proper security models for your SAP HANA system.

Learning Points

• Get best-practice strategies to properly provision users, manage repository roles and implement a manageable security model in SAP HANA
• Gain an overview of the 4 different types of privileges in SAP HANA
• Explore how third-party authentication can integrate with SAP HANA
• Find out how to provide selective access to data using analytic privileges in SAP HANA

Register or review a recorded version.

It has been some time since I last posted a blog about SAP BusinessObjects. In part, that was due to a lack of major changes in the SAP BusinessObjects platform. A few support packs were released, but only a handful of changes or enhancements caught my attention. In addition, I am excited to now be a part of the Protiviti family. In November 2015, the assets of Decision First Technologies were acquired by Protiviti. I am now a Director within the Protiviti Data and Analytics practice. I look forward to all the benefits we can now offer our customers, but unfortunately the acquisition transition required some of my time.

Now that things are settling, I hope to focus more on my blogging. Now let’s get to the good parts. With the release of SAP BusinessObjects 4.2 SP2, SAP has introduced a treasure trove of new enhancements, a proverbial wish list of features that have been desired for years. Much to my delight, SAP Web Intelligence has received several significant enhancements, particularly in terms of its integration with SAP HANA. There were also enhancements to the platform itself.

For example, there is now a recycling bin. Once it is enabled, if a user accidentally deletes a file, the administrator can save the day and recover it. Note that deleted files are kept only for a configured number of days before they are permanently deleted. Let’s take a more detailed look at my top 11 list of new features.

• Web Intelligence – Shared Elements

This is the starting point for something I have always wanted to see in Web Intelligence. For many years I have wanted a way to define a central report variable repository.

While it’s not quite implemented the way I wanted, the end result of the shared elements feature is much the same. Hopefully SAP will take this a step further in the future, but I can live with shared elements for now. Developers now have the option to publish report elements to a centrally located public folder on the platform. They can also refresh or re-sync these elements using the new Shared Elements panel within WebI. While this might not seem like an earth-shattering enhancement, let’s take a moment to discuss how it works and one way we can use it to our advantage. Take, for example, a report table.

Within that report table I have assigned a few key dimensions and measures. I have also assigned 5 report variables. These variables contain advanced calculations that are critical to the organization.

When I publish this table to a shared elements folder, the table, dimensions, measures and variables are all published. That’s right, the variables are published too. Later, I can import this shared element into another report, and all of the dimensions, measures and variables are imported with the table. One important functional note is that Web Intelligence will add a new query to support shared elements containing universe objects; it does not add the required dimensions and measures to any existing query. This might complicate matters if you are only attempting to retrieve the variables. However, you can manually update your variables to work with existing queries.
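One way to picture why the variables travel with the table is as a dependency closure: publishing an element collects it plus everything it references, directly or through other variables. The Python sketch below is a hypothetical model of that behavior, not SAP’s implementation; the element names, the report dictionary and the collect_dependencies helper are made up for illustration.

```python
from typing import Dict, List, Set


def collect_dependencies(element: str, depends_on: Dict[str, List[str]]) -> Set[str]:
    """Return `element` plus everything it references, directly or transitively."""
    collected: Set[str] = set()
    to_visit = [element]
    while to_visit:
        current = to_visit.pop()
        if current in collected:
            continue  # already collected (shared or circular reference)
        collected.add(current)
        to_visit.extend(depends_on.get(current, []))
    return collected


# Hypothetical report: a table using two variables built on universe objects.
report = {
    "Table: Margin by Region": ["[Region]", "[Revenue]", "[Gross Margin %]"],
    "[Gross Margin %]": ["[Gross Margin]", "[Revenue]"],
    "[Gross Margin]": ["[Revenue]", "[Cost]"],
    "Chart: Customers": ["[Customer]"],  # not referenced by the table
}

published = collect_dependencies("Table: Margin by Region", report)
print(sorted(published))
```

Note that "[Cost]" is picked up only indirectly, through the nested variable, while the unrelated chart and its "[Customer]" object are left behind.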

If you have not had the epiphany yet, let me help you out. As a best practice, we strive to maintain critical business logic in a central repository.

This is one of many ways that we can achieve a single version of the truth. For the first time, we now have the ability to store Web Intelligence elements (including variables) in a central repository. I would still argue that it is better to store variables within the universe, but shared elements are a good start. Assuming that developers can communicate and coordinate the use of shared elements, reports can now be more consistent throughout the organization.

I don’t want to understate the other great benefits of shared elements; variables are not the only one. Beyond variables, this is also an exceptional way for organizations to implement a central repository of analytics. Users can quickly import frequently used logos, charts and visualizations into their reports. Because the elements include all of their constituent dependencies, users will find this an excellent way to simplify their self-service needs.

I find it most fascinating in terms of charts and visual analytics. Casual users don’t need to focus on defining the queries, formats and elements of the chart; they can simply import someone else’s work.

• Web Intelligence – Parallel Data Provider Refresh

I was quite pleasantly surprised that this enhancement was delivered in 4.2. In the past, Web Intelligence executed the queries defined in a report serially. If there were four queries and each took 20 seconds to execute, the user would have to wait 4 x 20 = 80 seconds for the results. When queries are executed in parallel, users only have to wait for the longest-running query to complete.

In my previous example, that means the report will refresh in 20 seconds, not 80 seconds. Keep in mind that you can disable this feature, which you might have to do if your database cannot handle the extra concurrent workload.
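The arithmetic generalizes to a configurable limit on concurrent queries. This Python sketch is a simplified model, not how Web Intelligence schedules queries internally: it assumes each query’s duration is fixed and unaffected by concurrency (exactly the assumption that breaks when the database is overloaded), and that queries start in order as soon as a slot frees up. The refresh_time function and its max_parallel parameter are hypothetical names.

```python
import heapq
from typing import List


def refresh_time(durations: List[float], max_parallel: int) -> float:
    """Wall-clock time to run all queries with at most max_parallel at once.

    Queries start in the order given, each as soon as a slot is free.
    max_parallel=1 reproduces the old serial behaviour.
    """
    if max_parallel < 1:
        raise ValueError("max_parallel must be at least 1")
    running: List[float] = []  # min-heap of finish times for occupied slots
    for duration in durations:
        start = 0.0
        if len(running) == max_parallel:
            start = heapq.heappop(running)  # wait for the earliest slot to free up
        heapq.heappush(running, start + duration)
    return max(running, default=0.0)


# Four 20-second queries, as in the example above.
print(refresh_time([20, 20, 20, 20], max_parallel=1))  # 80.0 (serial)
print(refresh_time([20, 20, 20, 20], max_parallel=4))  # 20.0 (fully parallel)
print(refresh_time([20, 20, 20, 20], max_parallel=2))  # 40.0
```

A limit between the two extremes trades refresh time against concurrent load on the database.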

You can also increase or decrease the number of parallel queries to optimize your environment as needed.

• Web Intelligence – Geo Maps

Maps in Web Intelligence?

Yes, really: maps in Web Intelligence are no longer reserved for the mobile application. You can now create or view them in Web Intelligence desktop, browser or mobile. The feature also includes a geocoding engine, which allows you to geocode any city, state or country dimension within your existing dataset.

Simply right-click a dimension in the “Available Objects” panel and choose “Edit as Geography”. A wizard will appear to help you geo-encode the object. Note that the engine runs within an Adaptive Processing Server, and the feature will not work unless this service is running.

I found this to be true even when using the desktop version of Web Intelligence.

• Web Intelligence – Direct access to HANA views

For those who did not like the idea of creating a Universe for each SAP HANA information view, 4.2 now allows the report developer to directly access an SAP HANA information view without first creating a universe. From what I can decipher, this option simply generates a universe on the fly. However, it appears that metadata matters. The naming of objects is based on the label column names defined within SAP HANA. If you don’t have the label columns nicely defined, your Available Objects panel will look quite disorganized.

In addition, objects are not organized into folders based on the attribute view or other shared object semantics. That said, there is an option to organize dimension objects based on hierarchies defined in the HANA semantics. However, measures seem to be absent from this view in Web Intelligence (relational connections), which makes me scratch my head a little. Overall, I think it’s a great option, but still just an option.

If you want complete control over the semantics or how they are presented within Web Intelligence, you still need to define a Universe.

• Web Intelligence – SAP HANA Online

Let me start by saying this: it is not the same as direct access to HANA views. While the workflow might appear similar, there is a profound difference in how the data is processed in SAP HANA Online. For starters, the core data in this mode remains on HANA. Only the results of your visualization or table are actually transferred. In addition, report-side filters appear to get pushed down to SAP HANA.
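To make the pushdown idea concrete, here is a small Python sketch that uses the standard-library `sqlite3` module as a stand-in for SAP HANA. It contrasts filtering on the client, where every detail row is transferred before the filter is applied, with pushing the filter and aggregation down to the database, where only the result row comes back. The table and function names are hypothetical, and this illustrates the general principle only, not the actual mechanism Web Intelligence uses in SAP HANA Online mode.

```python
import sqlite3

# In-memory SQLite database standing in for SAP HANA (an assumption for illustration).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("East", 100.0), ("West", 250.0), ("East", 75.0), ("North", 40.0)],
)


def filter_on_client(region):
    """Transfer every detail row, then filter and aggregate locally."""
    rows = conn.execute("SELECT region, amount FROM sales").fetchall()
    total = sum(amount for r, amount in rows if r == region)
    return total, len(rows)               # (result, rows transferred)


def filter_pushed_down(region):
    """Let the database filter and aggregate; only the result row is transferred."""
    rows = conn.execute(
        "SELECT SUM(amount) FROM sales WHERE region = ?", (region,)
    ).fetchall()
    return rows[0][0], len(rows)          # (result, rows transferred)


print(filter_on_client("East"))    # (175.0, 4)
print(filter_pushed_down("East"))  # (175.0, 1)
```

Both functions return the same total; the difference is how many rows cross the wire, which grows with the table in the first case and stays at one in the second.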

In other modes of connecting to HANA, only query-level filters are pushed down. In summary, this option provides a self-service-centered experience that pushes many of the Web Intelligence data processing features down to HANA. As I discovered, there are some disadvantages to this option as well.

Because we are not using the Web Intelligence data provider (micro cube) to store the data, calculation context functions are not supported. The same is true of any function that leverages the very mature and capable Web Intelligence reporting engine.

Also, the semantics are once again an issue. For some reason, the HANA team and the Web Intelligence team can’t work together to properly display the information view semantics in the Available Objects panel.

Ironically, the semantics functionality was actually better in the 4.2 SP1 version than in the GA 4.2 SP2 release. In 4.2 SP1, the objects were organized by attribute view (the same was true of star join calculation views). Webi would generate a folder for each attribute view and organize each column into the parent folder. In 4.2 SP2, we are back to a flat list of objects. If your model has a lot of objects, it will be hard to find them without searching. I’m not sure what’s going on with this, but they need to make improvements.

For large models, there is no reason to present a flat list of objects. Despite these few disadvantages, this is a really great feature.

It truly has more advantages than disadvantages. Users gain many of the formatting advantages of Webi while also leveraging the data scalability features of SAP HANA. As an added bonus, report-side filters and many other operations are pushed down to SAP HANA, which makes reporting simple. Users do not have to focus so intently on optimizing performance with prompts and input parameters.

• IDT – Linked Universes

We can finally link universes in IDT.

UDT (Universe Design Tool) has had this capability for many years. I am not sure why it was not included in IDT (Information Design Tool) from the beginning. That’s all I have to say about that.

• IDT – Authored BW BEx Universes

As proof that users know best, SAP relented, and we can now define a Universe against a BEx query. No Java Connector or Data Federator is required. This option uses the BICS connection, which offers the best performance. To me, this all boils down to the need for better semantics integration. The same is also true of SAP HANA models.

Having the ability to define a UNX universe on BEx queries has a lot to do with the presentation and organization of objects. For some strange reason, users really care about the visual aspects of BI tools (and yes, that last statement was sarcasm). There are also options to change how measures are delegated. Measures that do not require delegation can be set to SUM aggregation, which will hopefully mean fewer and fewer #ToRefresh# warnings.

• Platform – Recycle Bin

One of the more frustrating aspects of the platform over the last few decades was the inability to easily recover accidentally deleted items.

In the past, such a recovery required third-party software or a sidecar restore of the environment. If you didn’t have third-party software or a backup, the deleted object was gone forever. Once enabled, the Recycle Bin allows administrators to recover deleted objects for a configurable amount of time.

For example, we can choose to hold on to objects for 60 days. Only public folder content is supported, and users cannot recover objects without administrator help. However, this is a great first step and a feature that has been needed for over a decade.

• Platform – LCMBIAR files

Well, it only took a few years, but we can finally selectively import objects contained in an LCMBIAR file within the Promotion Management web application. Ironically, I really wanted this functionality a few years ago to help with backups, specifically public folder backups to aid users who accidentally deleted objects. Now that SAP has designed the Recycle Bin, there is less of a need for this solution.

Prior to the latest enhancement, you had to import all objects in an LCMBIAR file. If I only wanted 1 of 8000 objects in the file, I had to import it into a dedicated temporary environment and then promote just the needed objects into the final environment.

• Platform – Split Installer

Using the installation command line, administrators can now prepare the system for installation without downtime. The installer does this by performing all of the binary caching and some of the SQLite installation database operations before it invokes the section of the install that requires downtime. Running setup.exe -cache invokes the caching portions of the install.

When you are ready to complete the install, running setup.exe -resume_after_cache completes the installation. In theory, splitting the work this way reduces downtime by eliminating the dependency between the two major installation and upgrade tasks. This is a great enhancement in all types of environments, and especially in large clustered environments. Previously, system downtime included the long and tedious process of caching deployment units.

• Platform – New License Keys

After you upgrade to 4.2 SP2, you will need to obtain new license keys.

The keys that once worked for 4.0 and 4.1 will not work after upgrading to 4.2 SP2. The graphical installer will also let you know this. Be prepared to log on to the SAP Service Marketplace and request new keys.

More Information and Links

I only mentioned what I thought to be the most interesting new features.

However, there are several other features included in 4.2 SP2. The following links contain more information about the new features in SAP BOBJ 4.2 SP2.

Check out the latest SAP Insider book

SAP HANA provides speedy access to your data, but only if everything is running smoothly. This anthology, a collection of articles recently published in SAP Professional Journal, contains tips and best practices on how to ensure that your data is highly available despite any system mishap or disaster that may occur. For example, Dr. Bjarne Berg shows you how to create a standard operating procedure (SOP) checklist that contains the daily operations, weekly jobs, and periodic upgrades and patches needed to keep your SAP HANA system up and running. Jonathan Haun calls on his extensive experience with SAP HANA to give you an overview of its disaster recovery (DR) and high availability (HA) options.

In a second article, he provides advice on the backup and restore process. And in his third piece, he explains how you can protect your data using SAP System Replication. Security expert Kehinde Eseyin lists 25 best practices for preventing data loss, while Rahul Urs gives an in-depth look at SAP HANA’s security functions. Ned Falk follows up on one of Kehinde’s tips, explaining how to set up the system usage type feature.

The feature lets you know if you are in a production versus a test system, preventing you from inadvertently causing a database issue. Irene Hopf gives an overview of best practices in a data center to ensure business continuity. Finally, we offer you two bonus articles that give you a taste of other SAP HANA topics covered in SAP Professional Journal. These are written by Christian Savelli, Dr. Berg, and Michael Vavlitis.

Table of Contents

Protect Your SAP HANA Investment with HA and DR Options
by Jonathan Haun, Consulting Manager, Business Intelligence, Decision First Technologies
Implementing an SAP HANA system involves more than just selecting a standalone server. Organizations must also consider the different options available in terms of high availability (HA) and disaster recovery (DR). Depending on the organization’s service-level requirements, multiple servers, storage devices, backup devices, and network devices might be required. This article gives an overview of the different ways organizations can achieve SAP HANA HA and DR. Equipped with this information, you can develop the right SAP HANA architecture and business continuity strategy more easily.

Mastering the SAP HANA Backup and Restore Process
by Jonathan Haun, Consulting Manager, Business Intelligence, Decision First Technologies
When organizations implement SAP HANA, they need to devise a strategy to protect the data that the system manages in memory. Depending on your SAP HANA use case, failure to protect this data can lead to significant monetary or productivity losses. One way to protect the data is through the implementation of a backup and restore strategy. This article helps to fortify your general knowledge of the SAP HANA backup and restore process. It also discusses a few key questions that should be asked when devising a backup strategy for SAP HANA.

Prevent Data Disaster in Your SAP HANA System Landscape with Effective Backup and Restore Strategies
by Kehinde Eseyin, Senior SAP GRC Consultant, Turnkey Consulting Ltd.
Become acquainted with concepts, best practices, optimal settings, tools, and recommendations that are invaluable for SAP HANA database backup and restore operations.

SAP HANA’s System Usage Type: Use This Simple Setting to Prevent Major Mistakes in Your Production System
by Ned Falk, Senior Education Consultant, SAP
Learn the steps needed to configure the SAP HANA Support Package 8 system usage type feature to enable warnings when the SAP system thinks you’re doing things you should not be doing in your SAP HANA production system.

No, Your SAP HANA-Based Data Center Is Not as Simple as a Toaster
by Irene Hopf, Global Thought Leader for SAP Solutions, Lenovo
Learn how to set up business continuity in SAP HANA with high availability, disaster recovery, and backup/restore concepts. This overview shows the challenges of managing SAP HANA-based applications in the data center.

How SAP HANA System Replication Protects Your Data
by Jonathan Haun, Consulting Manager, Business Intelligence, Decision First Technologies
When organizations implement SAP HANA, they need to devise a strategy to protect the data that the system manages in memory. Depending on your SAP HANA use case, failure to protect this data can lead to significant monetary or productivity losses. One way to protect the in-memory data is through the implementation of SAP HANA System Replication. SAP HANA System Replication is a solution provided by SAP that works with all certified SAP HANA appliance models. It also works with Tailored Datacenter Integration (TDI) configurations if you build your own system using TDI rules.

Tips on How to Secure and Control SAP HANA
by Rahul Urs, GRC Solutions Architect, itelligence, Inc.
Learn how to implement security and controls in SAP HANA and understand key areas of SAP HANA security, such as user management, security configuration, security and controls for SAP HANA, security flaws, and common oversights.

Avoid SAP HANA System Surprises with a Standard Operating Procedure Checklist
by Dr. Bjarne Berg, VP SAP Business Intelligence, COMERIT, Inc., and Professor at SAP University Alliance at Lenoir Rhyne University
Keep your SAP HANA system up and running with these preventive measures and tips for regular monitoring of system activities.

Bonus Article: Complement Warm Data Management in SAP HANA via Dynamic Tiering
by Christian Savelli, Senior Manager, COMERIT, Inc.
This bonus article describes the Dynamic Tiering option available starting with SAP HANA Support Package 9, which enables a more effective multi-temperature data strategy. It offers management of warm data content via the extended table concept, representing a major enhancement over the loading/unloading feature currently available. The main benefit offered is more control over the allocation of SAP HANA main memory and a corresponding mitigation of risks associated with memory bottlenecks.

Bonus Article: SAP HANA Performance Monitoring Using Design Studio
by Dr. Bjarne Berg, VP SAP Business Intelligence, COMERIT, Inc., and Professor at SAP University Alliance at Lenoir Rhyne University, and Michael Vavlitis, SAP BI Associate and Training Coordinator, COMERIT, Inc.
This article is a sampling of the content for system administrators you can find in SAP Professional Journal. Learn the details of developing and implementing SAP HANA performance monitoring using an application built with SAP BusinessObjects Design Studio. Active performance monitoring is a vital measure for maintaining the stability of an SAP HANA system, and Design Studio offers the ideal capabilities to track the most important SAP HANA performance indicators.

This posting is a little “late to the press”, but I thought I would follow tradition and post a what’s new for SAP BusinessObjects 4.1 SP5. Most of the important details are listed in the “What’s New” guide on help.sap.com.

However, I did find that a major enhancement had been implemented in the SAP BusinessObjects Explorer 4.1 SP5 plugin. From what I can tell, this enhancement was not listed in the “What’s New” guide.

I was actually notified of the enhancement through ideas.sap.com. So what is the major enhancement? Drumroll please... you now have a few formatting options when defining calculated measures within an SAP Explorer Information Space.

This includes the ability to define the number of decimal places and the currency symbol. Previously, you only had formatting options when your information space was based on a Universe. The Information Space would inherit the formats defined on the measures derived directly from the Universe. However, calculated measures (those defined in the information space) had no formatting options. This was of particular importance when BWA or SAP HANA was the prescribed data source (i.e., no Universe was used). One of the most common KPIs used by businesses is % Change.

To calculate percent change in Explorer, you have to first define its numerator and denominator as separate measures in the source. Using a calculated measure, you could then perform the division to produce a raw change ratio. Without the ability to format the number in the presentation layer, business users had to view the measure in its raw decimal format. For example, they would see 0.3354 in an Explorer chart or table. What they really wanted was to see the measure formatted as 33.5%. While this might seem like a trivial feature, I can attest that multiple customers found this to be a huge issue.
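The difference between the raw value and the formatted one is purely presentation. As a minimal Python sketch (the function names are hypothetical, and this is not Explorer's formatting feature), the calculated measure divides the numerator by the denominator, and the presentation layer then scales the ratio by 100 and appends a percent sign:

```python
def change_ratio(change_amount, prior_amount):
    """Calculated measure: numerator / denominator, both defined as source measures."""
    if prior_amount == 0:
        raise ZeroDivisionError("prior period value is zero; % change is undefined")
    return change_amount / prior_amount


def as_percent(ratio, decimals=1):
    """Presentation-layer format: multiply by 100, round, and append a percent sign."""
    return f"{ratio:.{decimals}%}"


raw = change_ratio(3354, 10000)
print(raw)              # 0.3354 (the raw decimal users saw)
print(as_percent(raw))  # 33.5% (the format they wanted)
```

Keeping the ratio unformatted in the measure and applying the format only at display time means the same measure can still be used in further calculations.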

Business users want to see the KPI in “%” format: “That is how it has always appeared in previous tools.” Fortunately, SAP finally added the feature in SAP BusinessObjects Explorer 4.1 SP5. There are a few other changes in SP5 as well.

You can now define Web Intelligence customizations at the user group and repository folder level. This means that you can pick and choose the Web Intelligence UI buttons that are visible based on group membership or folder location. Unfortunately, it is now impossible to figure out the forward fit plan for 4.1 SP5.

SAP Note tries to explain the new forward fit process, but your guess is as good as mine. Maybe this posting will get some feedback explaining how to determine the forward fit for a patch or support pack?

What are we joining?

When you design an SAP HANA information view, you must also define joins between tables or attribute views.

These joins can be configured in the foundation of attribute views and analytic views. They can also logically occur in analytic and calculation views.

Why do joins matter?

In some cases, joining tables can be an expensive process in the SAP HANA engines. This is especially true when the cardinality between tables is very high.

Consider, for example, an order header table with 20 million rows joined to an order line detail table with 100 million rows. Excessive joining in the model can also lead to performance issues. Excessive joining often occurs when we attempt to denormalize OLTP data using SAP HANA’s information views.

What is the solution to work around join cost?

Join pruning is a process where SAP HANA eliminates both tables and joins from its execution plan based on how the joins are defined in the information view and how data is queried from the information view.

For example, let’s assume that we have a customer table or attribute view configured with a left outer join to a sales transaction table in our analytic view. If we query the information view and only select SUM(SALES_AMOUNT) with no GROUP BY or WHERE clause, then the execution plan might skip the join between the customer table and the sales transaction table. However, if we execute SELECT CUSTOMER_NAME, SUM(SALES_AMOUNT), then the join between the customer table and the sales transaction table will be required regardless of the join type used. Whether pruning happens depends on how the joins are configured in our information view. It also depends on how the information view is queried. If every query we execute selects all attributes and measures, then join pruning would never be invoked.
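The reasoning behind this example can be reproduced with any SQL engine. The Python sketch below uses the standard-library `sqlite3` module as a stand-in for SAP HANA, with hypothetical CUSTOMER and SALES tables. It shows why a left outer join to a table with a unique key can be skipped when only SUM(SALES_AMOUNT) is requested: the join can neither drop nor duplicate sales rows, so the total is the same either way. Once CUSTOMER_NAME is selected, the join is unavoidable. The argument relies on each sales row matching at most one customer row; if the key were not unique, the join would multiply rows and change the sum.

```python
import sqlite3

# Hypothetical tables; SQLite stands in for SAP HANA.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, customer_name TEXT);
    CREATE TABLE sales (customer_id INTEGER, sales_amount REAL);
    INSERT INTO customer VALUES (1, 'Acme'), (2, 'Globex');
    INSERT INTO sales VALUES (1, 100.0), (1, 50.0), (2, 25.0), (3, 10.0);
""")

# Only the measure is requested. customer_id is unique in CUSTOMER, so the
# LEFT OUTER join can neither drop nor duplicate sales rows: skipping it
# cannot change the answer, which is what makes it a pruning candidate.
with_join = conn.execute("""
    SELECT SUM(s.sales_amount)
    FROM sales s LEFT OUTER JOIN customer c ON s.customer_id = c.customer_id
""").fetchone()[0]
without_join = conn.execute("SELECT SUM(sales_amount) FROM sales").fetchone()[0]
print(with_join, without_join)  # 185.0 185.0

# A customer attribute is requested, so the join has to be executed.
by_customer = conn.execute("""
    SELECT c.customer_name, SUM(s.sales_amount)
    FROM sales s LEFT OUTER JOIN customer c ON s.customer_id = c.customer_id
    GROUP BY c.customer_name
    ORDER BY c.customer_name
""").fetchall()
print(by_customer)  # [(None, 10.0), ('Acme', 150.0), ('Globex', 25.0)]
```

The sale for customer 3, which has no master data, shows up under a NULL name: the left outer join keeps the transaction even though the customer is missing.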

Fortunately, in the real world, this is not typically the case. Most queries only select a subset of data from the information view. For this reason, we should try to design our information views to take advantage of join pruning.

What join options do I have?

There are five main types of joins to look at in this context.

Inner Joins, Referential Joins, Left Outer, Right Outer, and Text Joins. Below is an outline of how SAP HANA handles each join type.

INNER JOIN

Inner joins are always evaluated in the model. No join pruning occurs because an inner join effectively tells the SAP HANA engine to always join, no matter what query is executed.

Records are only returned when a matching value is found in both tables. Overall, inner joins are one of the most expensive join types that can be defined in an information view. They can also remove records from the results if referential integrity is poorly maintained in the source tables.

REFERENTIAL JOIN

For all intents and purposes, the referential join acts like an inner join. However, under specific circumstances, the referential join will be pruned from the execution of the information view.

Assuming that there are no filters defined in an attribute view’s foundation, the SAP HANA engines will likely prune the join from the execution plan of the analytic view. This assumes that no columns are queried within the same attribute view. However, if a column from the attribute view is included in a query, this join will act as an inner join. Let me reiterate the exception to the pruning rule one more time. If filters are defined in the attribute view, the join will be included and enforced as an inner join even if no attribute view columns are selected. This means that records are only returned when a match is found in both the attribute view and analytic view foundation table.

It also means that referential joins are not always pruned from the information view execution plan. Pay close attention to this exception, because referential joins can be just as expensive as inner joins. When a referential join is pruned, we have to make sure that the referential integrity between tables is sound. If it is not, we might get varying results based on the columns selected in our queries. For example, a transaction might not have a shipped date defined. When we query for transactions with a shipped date, this transaction would be excluded. When we exclude any shipped date attributes from our query, the transaction would magically appear again because of join pruning.
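The shipped date scenario can be reproduced with a similar sketch. Again, `sqlite3` stands in for SAP HANA and the tables are hypothetical; the two queries mimic what the engine effectively runs when the referential join is enforced (a shipped-date attribute is selected) versus pruned (no shipped-date attribute is selected), rather than invoking HANA's own pruning logic:

```python
import sqlite3

# Hypothetical tables; SQLite stands in for SAP HANA.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE ship_date (date_key INTEGER PRIMARY KEY, ship_year INTEGER);
    CREATE TABLE transactions (txn_id INTEGER, date_key INTEGER, amount REAL);
    INSERT INTO ship_date VALUES (20240105, 2024);
    -- Transaction 2 has no shipped date, so it has no match in SHIP_DATE.
    INSERT INTO transactions VALUES (1, 20240105, 100.0), (2, NULL, 40.0);
""")

# A shipped-date attribute is selected: the referential join acts as an inner join.
enforced = conn.execute("""
    SELECT d.ship_year, SUM(t.amount)
    FROM transactions t INNER JOIN ship_date d ON t.date_key = d.date_key
    GROUP BY d.ship_year
""").fetchall()

# No shipped-date attribute is selected and the join is pruned:
# the query effectively runs against the transactions alone.
pruned = conn.execute("SELECT SUM(amount) FROM transactions").fetchone()[0]

print(enforced)  # [(2024, 100.0)]
print(pruned)    # 140.0
```

The 40.0 difference is the orphaned transaction, which disappears or reappears depending only on which columns the query selects.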

Based on experience, this can confuse the heck out of an end user or a developer 🙂

LEFT OUTER

Based on several query scenarios that I tested, the left outer join is always pruned from the execution plan, assuming that no columns are selected from an attribute view defined within the model. Left outer joins are often defined when the analytic view foundation table is known to contain records that have no matching record within an attribute view. By design, they prevent the model from excluding transactions, ensuring that all possible transactions are returned even if we are missing master data. Overall, I would say that the left outer join has the potential to be the best-performing join within our information view definition. However, we have to make sure that it makes sense before we arbitrarily set up our models with left outer joins.

We sometimes need the inner join to help exclude records. For example, let’s assume that we have a date attribute view joined to a date column in our analytic view foundation table. Using an inner join, we can exclude any transaction that has no defined date.

There are other instances where we might find phantom transactions in our aggregates with a left outer join, for example, a transaction that has no customer, date, or product. In those cases, something was likely only partially deleted from the system. Therefore, referential integrity issues can create problems. If you recall, a similar issue can happen with referential joins. However, with left outer joins we will always return the transaction.

RIGHT OUTER

The right outer join is used in instances where all attribute view values need to be returned, even if they have no matching transactions. For example, I need a complete list of all customers even if they have never been issued an invoice.

This would be helpful if the query were targeting both existing and potential customers. Keep in mind that a mismatched transaction will be excluded from any analytic view’s aggregates. Based on my testing, a right outer join is always performed in the execution of the model. I was not able to devise a single scenario where the join was pruned from the execution of the model. With this in mind, it will have a high cost when used in the information view definition.

TEXT JOIN

This is effectively an inner join set up specifically to work with the SPRAS field found in SAP Business Suite tables. Based on my brief testing, it is always executed and never pruned from the model.
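To summarize the observations above, the following Python sketch encodes them as a small decision function. It captures only the behavior described in this post, based on tests against the SAP HANA revision in use at the time; it is not SAP's documented optimizer logic, other factors (such as the cardinality settings of a join) may also matter, and the type and field names are hypothetical.

```python
from dataclasses import dataclass

JOIN_TYPES = {"inner", "referential", "left_outer", "right_outer", "text"}


@dataclass(frozen=True)
class JoinUsage:
    """How a query touches one join (hypothetical model, for illustration)."""
    join_type: str
    attribute_columns_selected: bool          # any attribute view column in the query?
    attribute_view_has_filters: bool = False  # filters defined in the attribute view?


def is_pruned(usage: JoinUsage) -> bool:
    """Pruning behavior as observed in the tests described in this post."""
    if usage.join_type not in JOIN_TYPES:
        raise ValueError(f"unknown join type: {usage.join_type}")
    if usage.join_type == "referential":
        # Pruned only when no attribute columns are selected AND the attribute
        # view defines no filters; otherwise it is enforced like an inner join.
        return not (usage.attribute_columns_selected or usage.attribute_view_has_filters)
    if usage.join_type == "left_outer":
        # Pruned whenever no attribute view columns are selected.
        return not usage.attribute_columns_selected
    # Inner, right outer, and text joins were always executed.
    return False


print(is_pruned(JoinUsage("referential", False)))        # True
print(is_pruned(JoinUsage("referential", False, True)))  # False (filter in attribute view)
print(is_pruned(JoinUsage("left_outer", True)))          # False (attribute selected)
print(is_pruned(JoinUsage("right_outer", False)))        # False
```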

A few weeks ago, SAP released SAP BusinessObjects 4.1 Support Pack 4 (SP4). This package introduced a few new features, which are listed in the “What’s New” guide.

It also introduced compatibility or support for a few new items. There is not much to talk about, so I’ll keep it brief.

New Features (Highlights)

• The translation manager now supports Analysis for OLAP documents.
• A few Web Intelligence charting enhancements.
• You can now schedule BEx-based Web Intelligence reports where the BEx query contains dynamic variables.

Supported Platforms (Highlights)

• Support for Microsoft SQL Server 2014
• Support for Oracle 12c
• Support for SAP HANA SPS 08
• Official support for Adobe Flash Player 12

Links

Forward Fit information: contains the fixes found in the following releases:

• 4.1 SP 3.1
• 4.1 SP 2.3
• 4.1 SP 1.7
• 4.0 SP 9.1
• 4.0 SP 8.5
• 4.0 SP 7.9
• 4.0 SP 6.12

If you are active in the SAP ecosphere and you have somehow managed to never see or hear anything about SAP HANA, I can only conclude the following: you obviously live under a rock in the deepest, darkest forest on a planet in a distant galaxy where SAP has yet to establish a sales territory. All joking aside, SAP HANA has been at the forefront of most SAP-related discussions in recent years. For those who know about SAP HANA, I would bet that your first thought is always related to one word. That word is “fast”.

SAP HANA is an in-memory, columnar-store, massively parallel analytical data processing engine. For the average business intelligence (BI) consumer, this conglomeration of adjectives and technical terms means one thing: it’s fast. If we accept that SAP HANA is fast, we have to ask ourselves what we gain with speed. We then have to ask whether speed alone is a primary reason to purchase SAP HANA.

What do I gain with speed?

Below I will list a few of the more obvious reasons that speed matters. There are other reasons not listed, but this will help start the thinking process.

Productivity

It is very easy to argue that we are more productive when we spend less time waiting for software to respond. In the golden oldie days of BI, we would often endure queries that required 30+ minutes to execute.

That was 30 minutes we had to spend doing something else. I like to call these queries the “coffee break queries”, because we often use this time to go get coffee. If we can reduce these queries to seconds with SAP HANA, users will be more likely to stay engaged and remain productive.

Depth

Speaking of 30-minute queries, can you imagine how unwilling a consumer would be to explore their data if every perspective change required a 30-minute wait? My experience is that users quickly lose interest in exploring their data when the software is too slow to respond.

If we can reduce these queries to seconds, users will be more likely not only to be productive but also to dig a little deeper into their data. Think of SAP BusinessObjects Explorer running on SAP HANA. Users can take a billion-row dataset and explore it from multiple perspectives. Because of speed, we can now gain better depth into our data.

Data Loading

When we think of speed, we often focus on the consumer’s experience. However, daily IT processes can also be accelerated with SAP HANA due to its increased data loading capabilities.

If your legacy data load process required 14+ hours, most SAP HANA solutions will likely reduce that by more than 50%. This gives IT departments more time to recover from data load failures or to extend the scope of the data set. It also gives data consumers a greater chance that their data will be available the next day.

Competitive Edge

As a general rule, if we can outthink and respond faster to a changing economic climate, we often gain an edge over our competition. In some ways, SAP HANA can help us do that. SAP HANA cannot think for us, but it can help us discover trends, identify changes, understand our successes, identify our failures, and accelerate other areas of our business. Most organizations can already do all of these things effectively with their current BI environments.

However, imagine how much better it will be when it happens faster with larger and more complex datasets.

Customer satisfaction

Another competitive advantage for organizations is their ability to service their customers. Speed can help an organization better understand its customers by instantly analyzing past history and predicting future needs.

This can be done quickly and on the fly when we have speed. With that advantage we can start embedding sophisticated analytics in our frontend applications.

Take, for example, a call center. If we enter the customer’s details into a system, we can then have a series of analytics pop up that help us understand the customer’s relationship with our organization. We can also suggest products and services they might be interested in based on these analytics. This is all achievable because of speed.

Is speed alone the main reason for purchasing SAP HANA?

Query and processing speeds alone are not the only reasons to purchase SAP HANA. There is more to SAP HANA than just speed.

Speed allows an organization to achieve technical wonders but behind the speed is a process. There must be a process for obtaining data that is consistent, repeatable and reliable.

For years we have known this as the Enterprise Information Management (EIM) process. Using processes and tools, organizations can obtain data, model it into usable structures and then load it into a database for querying. The process also helps other initiatives such as data governance, data quality and a “single version of the truth”. The downside to the process is that obtaining new data is often seen as slow and cumbersome by the data consumers.

They often have to wait weeks or months before data is available to them. This happens for a variety of reasons. IT resources often have to first find the data, develop a process to capture it on a recurring basis and then deal with any gaps or inconsistencies. That is not necessarily a problem that can be overcome with any level of speed or technology. The hope is that these complexities subside after a strong data governance program is instituted, one where the organization begins to manage data as an asset as opposed to a byproduct. In short, only processes and management can fix part of the EIM problem.

However, there is another side to EIM that has evolved around the needs of the data consumer. There is more to data than just storing it in a Kimball/Inmon star schema of tables.

Users have to be able to construct or access queries that can answer relevant business questions. If we solve these problems with the EIM process alone, we often end up with extra aggregate tables, custom fact tables or a variety of different tables formulated to help query processing. Unfortunately, these extra tables add more and more time to the recurring data update process. As the processing time and complexity of managing these processes increases, the data consumer becomes more and more impatient.

With a traditional RDBMS, these extra tables are also sometimes required because of the inefficiencies of the row store and spinning disk. With SAP HANA, we can begin rethinking this strategy on all fronts. SAP HANA’s speed helps us reshape this strategy, but there is more to this story than speed. Let’s not forget that SAP HANA also has something special built into its data platform.

SAP HANA has multidimensional models built directly into the database. They are called attribute views, analytic views and calculation views. In a generic sense, they act as a semantic layer. They are often called information views or information models. They act as a layer that exists between the database tables and the data consumers. They provide easy, consistent and controlled access to the data.

In a traditional BI landscape, this semantic layer is typically separated from the RDBMS. However, with SAP HANA it is directly integrated.

In some BI products, this layer acts as a separate data store. Take for example the traditional OLAP cube. OLAP cubes, using MOLAP storage, not only store the metadata but they also store the actual data. They act like a supercharged BI database but they require extra time to load. Then there are technologies such as the SAP BusinessObjects universe. It takes a ROLAP approach where data is kept in the database and only metadata is stored in the semantic layer.
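To make the MOLAP/ROLAP contrast concrete, here is a toy sketch in Python, assuming an in-memory SQLite database as a stand-in for the RDBMS. The sales table, its columns and the `ROLAP_METADATA` dictionary are hypothetical illustrations, not how an OLAP server or a BusinessObjects universe is actually built. The MOLAP side pre-computes every aggregate at load time; the ROLAP side keeps only metadata and generates SQL when a question is asked.

```python
import sqlite3
from collections import defaultdict

# Hypothetical sample fact rows: (region, product, revenue)
ROWS = [
    ("EMEA", "Widget", 100.0),
    ("EMEA", "Gadget", 50.0),
    ("APJ", "Widget", 70.0),
]

def build_molap_cube(rows):
    """MOLAP-style: pre-aggregate every cell and roll-up at load time."""
    cube = defaultdict(float)
    for region, product, revenue in rows:
        cube[(region, product)] += revenue
        cube[(region, "*")] += revenue   # roll-up over all products
        cube[("*", product)] += revenue  # roll-up over all regions
        cube[("*", "*")] += revenue      # grand total
    return dict(cube)

# ROLAP-style semantic layer: only metadata mapping business names to SQL.
ROLAP_METADATA = {
    "table": "sales",
    "dimensions": {"Region": "region", "Product": "product"},
    "measures": {"Revenue": "SUM(revenue)"},
}

def rolap_query(conn, dimension, measure, meta=ROLAP_METADATA):
    """Generate SQL from metadata at query time; the data stays in the database."""
    col = meta["dimensions"][dimension]
    expr = meta["measures"][measure]
    sql = f"SELECT {col}, {expr} FROM {meta['table']} GROUP BY {col} ORDER BY {col}"
    return conn.execute(sql).fetchall()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, product TEXT, revenue REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", ROWS)

cube = build_molap_cube(ROWS)
print(cube[("EMEA", "*")])                     # answered from the pre-built cube
print(rolap_query(conn, "Region", "Revenue"))  # answered by generated SQL
```

The cube answers from pre-computed cells but has to be rebuilt whenever the data changes, while the ROLAP query always reads current data at the cost of doing the work at query time.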

The ROLAP approach cuts down on data load times, but the semantic layer interface is typically proprietary. This means that many BI tools cannot access the semantic layer. In most cases the same is true of the OLAP cube, because not every BI tool has an interface to its data. The MOLAP and ROLAP methodologies both have multiple benefits, which are beyond the scope of this posting. However, one thing is clear with the legacy semantic layer.

It has traditionally been separated from the RDBMS, and it often works with only a limited set of BI tools. SAP HANA is different, though. To understand how it is different, let’s answer two questions. One, how does SAP HANA help the overall EIM process? Two, how do we leverage the SAP HANA multidimensional information models to aid consumers?

SAP HANA helps the overall EIM process by eliminating the need to create special use tables and pre-aggregate tables. With SAP HANA, these tables can be converted into logical information views and accessed with SQL.
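A minimal sketch of that conversion, again using SQLite as a stand-in: a SQLite view is a plain SQL view, not an SAP HANA attribute, analytic or calculation view, and all table and view names here are hypothetical. It shows why a logical view removes a step from the recurring load: the persisted aggregate goes stale when a new fact row arrives, while the view reflects it immediately.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales_fact (order_id INTEGER, region TEXT, revenue REAL);
    INSERT INTO sales_fact VALUES (1, 'EMEA', 100.0), (2, 'APJ', 70.0);

    -- Traditional approach: a persisted pre-aggregate table that the
    -- recurring load process must rebuild after every update.
    CREATE TABLE sales_by_region_agg AS
        SELECT region, SUM(revenue) AS revenue FROM sales_fact GROUP BY region;

    -- Logical approach: a view that aggregates at query time, no extra load step.
    CREATE VIEW sales_by_region_v AS
        SELECT region, SUM(revenue) AS revenue FROM sales_fact GROUP BY region;
""")

# A new fact row arrives through the normal data load.
conn.execute("INSERT INTO sales_fact VALUES (3, 'EMEA', 50.0)")

def emea_revenue(source):
    # `source` is one of the two trusted object names above, never user input.
    return conn.execute(
        f"SELECT revenue FROM {source} WHERE region = 'EMEA'").fetchone()[0]

print(emea_revenue("sales_by_region_agg"))  # stale until the table is rebuilt
print(emea_revenue("sales_by_region_v"))    # reflects the new row immediately
```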

By eliminating these persistent tables, we reduce the time required to execute the recurring data update process. These same information views also help IT make changes faster. By reducing the number of physical tables in the model, IT developers are able to make changes without also having to update data in downstream tables. The basic idea is that logical information views are more agile than the equivalent physical tables. If we assume that agility is an important benefit of SAP HANA, we must also consider relevancy.

Getting content to the consumer quickly is part of the battle. We also have to make sure that the content is relevant. This means that we need to make sure that the content helps the consumer answer relevant questions.

This is also an area where the information view helps the consumer. Developers can create custom logical models that contain relevant data attributes and measures. Because they are embedded into the RDBMS and centrally located, these views can also be accessed by any tool that supports the SAP HANA ODBC, JDBC or MDX drivers. This helps an organization to maintain a single version of the truth while simultaneously providing an agile and relevant foundation for the consumption of data. The above figure helps to illustrate this benefit. Starting at the bottom we wrap the entire process into the Data Quality and Data Governance umbrella. This means that these processes govern everything we do in SAP HANA.

We then focus on how the data gets into SAP HANA tables. This can be a daily batch Extract, Transform, Load (ETL) process with SAP Data Services or a daily delta load using the BW extractors into a BW on HANA instance.

We also have to look at Extract, Load, Transform (ELT) processes. This includes instances where SAP Landscape Transformation (SLT), SAP Replication Server or SAP Event Stream Processor (ESP) is used to provision data into SAP HANA in real time. As an added bonus, we can also include the BI solutions built into SAP Business Suite on HANA in this area. The provisioning solution used at this layer depends on the data consumer’s requirements and the organization’s needs.
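The difference between ETL and ELT is mainly where the transformation happens. The sketch below is a simplification that assumes hypothetical raw records with amounts stored as text such as `"100.50 EUR"`; it does not model SAP Data Services, SLT or any replication tool, and it uses SQLite in place of SAP HANA. ETL cleans the rows before loading them; ELT loads them unchanged and applies the same transformation inside the database.

```python
import sqlite3

# Hypothetical raw source records: amounts arrive as text with a currency code.
SOURCE = [("1", "EMEA", "100.50 EUR"), ("2", "APJ", "70.00 EUR")]

def etl(conn):
    """ETL: transform in the integration layer, then load the cleaned rows."""
    cleaned = [(int(oid), region, float(amount.split()[0]))
               for oid, region, amount in SOURCE]
    conn.execute("CREATE TABLE sales_etl (order_id INTEGER, region TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales_etl VALUES (?, ?, ?)", cleaned)

def elt(conn):
    """ELT: load the raw rows as-is, then transform inside the database."""
    conn.execute("CREATE TABLE sales_raw (order_id TEXT, region TEXT, amount TEXT)")
    conn.executemany("INSERT INTO sales_raw VALUES (?, ?, ?)", SOURCE)
    # The transformation lives in the database, here as a logical view.
    conn.execute("""
        CREATE VIEW sales_elt AS
        SELECT CAST(order_id AS INTEGER) AS order_id, region,
               CAST(substr(amount, 1, instr(amount, ' ') - 1) AS REAL) AS amount
        FROM sales_raw
    """)

conn = sqlite3.connect(":memory:")
etl(conn)
elt(conn)
print(conn.execute("SELECT SUM(amount) FROM sales_etl").fetchone()[0])
print(conn.execute("SELECT SUM(amount) FROM sales_elt").fetchone()[0])
```

Both paths end with the same rows; the ELT variant keeps the raw data in the database, which fits the goal of storing data once and doing the remaining work logically.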

However, the goal at the data provisioning layer is to minimize the amount of data duplication and eliminate the need for subsequent processing into additional tables. Data should be stored at its lowest level of granularity in either a normalized or denormalized fashion. Ideally it is only stored in a single location that can be reused in any information model. For example, this means we can focus on developing the base FACT and DIMENSION tables, DSOs or replicating normalized ECC tables. Anything that is required above this layer should be logical. This is not to say that we can facilitate all needs with logical models, but it should be the preferred methodology to facilitate an agile foundation.

Above the physical table layer we also have what I am calling the “Business View” layer. This is the layer where we achieve two goals. One, we try to do the bulk of specialized transformations and calculations at this layer. The SAP HANA information views are logical and do not require the movement of data, which makes them agile when changes are required. Two, we try to construct views that facilitate the needs of the data consumer.
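The layering can be sketched with stacked views, assuming SQLite as a stand-in for SAP HANA (in SAP HANA this would more likely be a calculation view modeled over attribute and analytic views). The physical layer holds only base fact and dimension rows at the lowest grain; the join, the calculated margin and the consumer-facing aggregation are all logical, so none of them needs a load step when the base data changes. Table and view names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Physical layer: base fact and dimension tables at the lowest grain.
    CREATE TABLE fact_sales (product_id INTEGER, qty INTEGER, revenue REAL, cost REAL);
    CREATE TABLE dim_product (product_id INTEGER, product_name TEXT, category TEXT);
    INSERT INTO dim_product VALUES (1, 'Widget', 'Hardware'), (2, 'Support', 'Services');
    INSERT INTO fact_sales VALUES (1, 10, 1000.0, 600.0), (2, 5, 500.0, 100.0),
                                  (1, 2, 200.0, 120.0);

    -- Business view layer: join plus a calculated measure, all logical.
    CREATE VIEW v_sales_enriched AS
        SELECT d.category, f.qty, f.revenue, f.cost,
               f.revenue - f.cost AS margin
        FROM fact_sales f JOIN dim_product d ON d.product_id = f.product_id;

    -- Consumer-facing view built on top of another view; no data is copied.
    CREATE VIEW v_margin_by_category AS
        SELECT category,
               SUM(revenue) AS revenue,
               SUM(margin) AS margin,
               ROUND(100.0 * SUM(margin) / SUM(revenue), 1) AS margin_pct
        FROM v_sales_enriched GROUP BY category;
""")

for row in conn.execute("SELECT * FROM v_margin_by_category ORDER BY category"):
    print(row)
```

Changing how margin is calculated means redefining one view rather than reloading a downstream table.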

By maintaining relevant views, we help data consumers become satisfied, productive decision makers. At the top of the diagram we have the SAP BusinessObjects BI tools.

Ideally we would use SAP BusinessObjects, but third-party tools can also be considered. Regardless of the type of tool, SAP HANA information views should be the primary point of access.

This is because they should contain all of the relevant transformations, security and calculations. Again, they are centralized which helps foster a single version of the truth throughout the organization. In summary, beyond speed there are two other fundamental benefits of SAP HANA.

They are agility and relevancy. Regardless of the SAP HANA solution we choose, agility and relevancy can be leveraged. They might be implemented in slightly different ways, but the goal should remain the same. As an added benefit, we can also expect great performance (in other words, “Speed” helps too).

Many hardware vendors are on the verge of releasing, or have already released, their new certified SAP HANA servers based on the Intel Ivy Bridge v2 CPU and related chipsets. So what does this actually mean to the consumer?

• A faster RAM chip means faster query response time. The Ivy Bridge v2 CPU supports DIMMs based on DDR3-1066, 1333 or 1600 MHz. The previous generation of servers only supported DDR3 memory clock speeds of 800, 978, 1066 or 1333 MHz. Faster memory clock speeds mean faster memory access. The net result is a faster SAP HANA system.

• A faster CPU clock also means faster query response times. The certified SAP HANA systems running the Ivy Bridge v2 CPU support clock speeds of 2.8 GHz with turbo speeds of 3.4 GHz. The previous generation of E7 CPUs operated at 2.4 GHz with turbo speeds of 2.8 GHz. A faster clock means fewer wait cycles and a faster SAP HANA system.

• The Ivy Bridge v2 CPU supports 15 cores per CPU socket. This allows SAP HANA to perform more parallel calculations, which results in faster speeds and support for greater workloads. There are more benefits as well: • This allows hardware vendors to supply customers with a 4-socket (60-core) server that supports 1TB of RAM. Unless something recently changed, SAP has always maintained that the ratio between RAM and CPU cores needs to be around 16GB per core for analytical solutions. The previous generation of 10-core CPUs could only achieve this with an 8-socket motherboard. Because Ivy Bridge v2 supports 15 cores per socket, vendors can now operate 4-socket SAP HANA systems that host 1TB of RAM.

In general, this will help reduce the cost of a 1TB server. 8-socket chassis and motherboards are generally very expensive, so a 4-socket alternative will likely be less expensive.

• For SoH (Suite on SAP HANA) the ratio is different. In general, 32GB per core is supported. This means that a 4-socket (60-core) server can now host 2TB of RAM. This will also greatly reduce the cost of SAP HANA systems running SoH. • The new generation is said to yield a 2x increase in speed compared to the 1st generation of certified SAP HANA systems. However, I still think we need to verify the performance as vendors try to support 1TB of RAM on the 4-socket architecture. If you think about it, the 1st generation 8-socket systems supported 80 cores.

The new 4-socket Ivy Bridge v2 systems support 60 cores. For a 1TB (1024GB) system, that is a drop from 80 to 60 cores and an increase from 12.8GB to 17.1GB of RAM per core (1024GB / 80 versus 1024GB / 60). Perhaps the faster memory clock speeds and other enhancements to Ivy Bridge v2 easily overcome any issues? I guess we will need to wait until official benchmarks are available before passing judgement. • Some vendors are able to build XS (Extra Small) through XL (Extra Large) SAP HANA systems on the same server chassis and motherboard platform.

This will allow organizations to scale up, adding RAM and CPUs as needed. The previous generation of servers was based on a variety of platforms, so organizations often had to purchase a new server when they needed to scale up. • The logging partition appears to no longer require super-fast PCIe NAND flash cards.

The first generation of SAP HANA servers were all equipped with Fusion-io cards or RAID 10 SSD arrays. The 2nd generation appears to have relaxed the IOPS requirements. If this is truly the case, the storage cost for SAP HANA servers should also be greatly reduced.

The PCIe cards and SSD arrays are very expensive. If SAP HANA can operate its logging mechanism at optimal speeds on a partition using more cost-effective spinning disks, you should expect a less costly SAP HANA server. • Note: SAP HANA stores and accesses data in RAM. The disks only contain redundant information so the data can be recovered after a power failure. The only conceivable downside to a slower logging partition is a scenario where bulk loading large chunks of data into SAP HANA is slowed.

It can also affect SAP HANA startup time. At startup, SAP HANA loads row-store tables from disk and lazily loads column-store tables as needed.

If anything needs to be recreated from the logs at startup, I would assume that a faster disk is better. The new question is, “how much better”? • Note: I have an open question on why the logging partition no longer requires fast disks. Something must have changed?
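The RAM-to-core arithmetic from the Ivy Bridge v2 points above can be checked with a few lines of Python. This is back-of-the-envelope math using only the ratios quoted in this post (16GB per core for analytics, 32GB per core for SoH), not an official SAP sizing method; the function names are illustrative.

```python
def max_ram_gb(sockets: int, cores_per_socket: int, gb_per_core: int) -> int:
    """RAM a server can host when sizing is capped by a fixed GB-per-core ratio."""
    return sockets * cores_per_socket * gb_per_core

def ram_per_core_gb(ram_gb: float, sockets: int, cores_per_socket: int) -> float:
    """RAM per core for a given configuration, rounded to one decimal."""
    return round(ram_gb / (sockets * cores_per_socket), 1)

ANALYTICS_GB_PER_CORE = 16  # ratio quoted above for analytical solutions
SOH_GB_PER_CORE = 32        # ratio quoted above for Suite on HANA

# Previous generation: 10-core CPUs.
print(max_ram_gb(4, 10, ANALYTICS_GB_PER_CORE))  # 640  -> not enough for 1TB
print(max_ram_gb(8, 10, ANALYTICS_GB_PER_CORE))  # 1280 -> 1TB needs 8 sockets
# Ivy Bridge v2: 15-core CPUs.
print(max_ram_gb(4, 15, ANALYTICS_GB_PER_CORE))  # 960  -> roughly 1TB on 4 sockets
print(max_ram_gb(4, 15, SOH_GB_PER_CORE))        # 1920 -> roughly 2TB for SoH

# RAM per core for a 1TB (1024GB) system on each platform.
print(ram_per_core_gb(1024, 8, 10))  # 12.8
print(ram_per_core_gb(1024, 4, 15))  # 17.1
```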
